Have you ever wondered what makes Michele Banks tick? Nature Microbiology did. So, they asked her. You can read their interview with Michele here and gaze upon her lovely artwork for their homepage here.

Nature Microbiology: When did you first become exposed to scientific images?

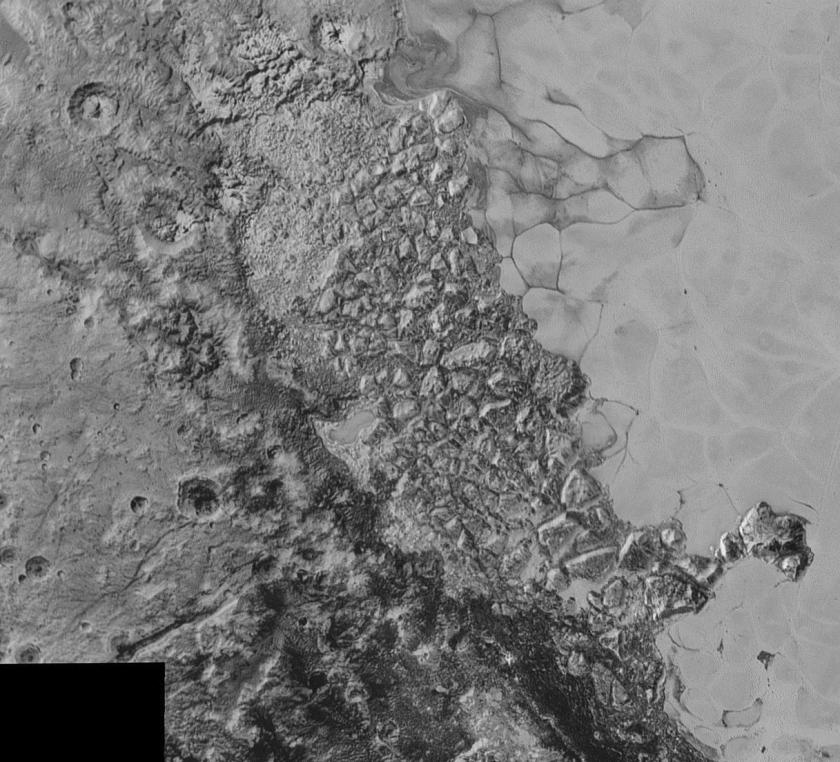

Michele Banks: I started doing watercolours about 15 years ago. I was mainly working in pure abstraction, just playing with colour and with the properties of the paint. One of the things I love to do is wet-in-wet technique, which gives a ‘bleeding’ effect. I showed some of my wet-in-wet work at the Children’s National Medical Center here in Washington DC about 10 years ago, and they told me they liked my work because it looked like things under a microscope.

We hope the interest in the overlap of science and art will be a theme that continues throughout future Nature Microbiology issues – along with open access, gender balance in publishing, shying away from bogus impact factors, etc.